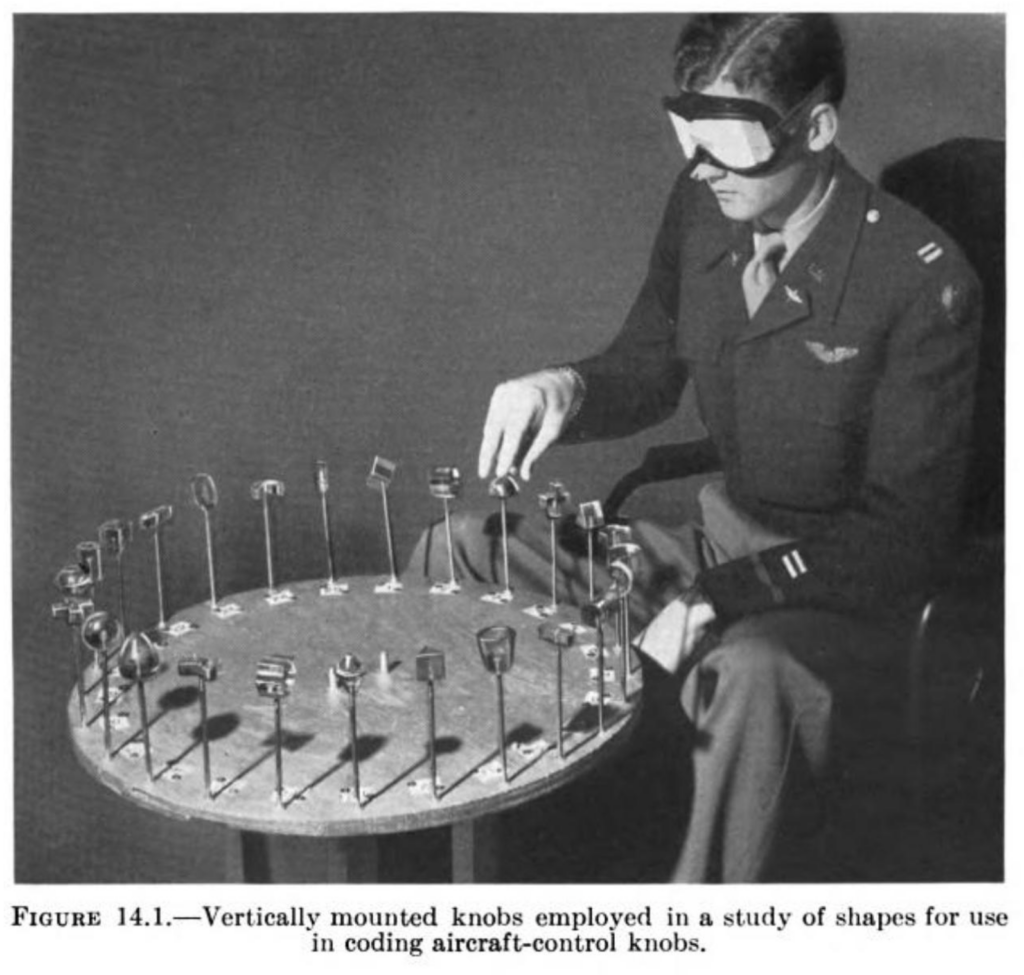

The B-17 Bomber, also known as “the flying fortress,” was a four-engine heavy bomber plane used in World War II that became famous for being able to return to base even after sustaining heavy damage. Despite their effectiveness, B-17s had an unfortunate tendency: They unexpectedly crashed on the runway. Any single crash could be attributed to pilot error; in wartime, pilots may have had less training than would be optimal or may have needed to fly even in difficult conditions. However, close inspection of the cockpits suggested another explanation (Chapanis, 1953; Fitts & Jones, 1947). In the B-17, the controls for the landing gear were right next to—and shaped identically to—the controls for the wing flaps. When pilots were trying to adjust the wing flaps, they were inadvertently retracting the landing gear, leading the planes to collapse on the tarmac. In the C-47 plane, the controls were not adjacent, and the error never occurred (Roscoe, 1997). Therefore, avoiding these catastrophic errors in the B-17 turned out to be very simple: By gluing a wheel-shaped knob to the landing gear control and a wedge-shaped knob to the wing flap control, pilots could easily distinguish them by feel. The B-17s stopped crashing.

B-17 Bomber, also known as “the flying fortress.” Source: American Air Museum

When mistakes happen, it is common to ask “Who caused the error?” and adopt what James Reason (2000) refers to as the person approach toward errors. This approach assumes that errors are failures of individuals, caused by negligence, inattention, or ineptitude. The person approach would therefore advocate preventing B-17 crashes by finding better pilots.

However, the fact that this error was somewhat common (and so unexpected) led researchers to ask a different question: “What caused the error?” Rather than thinking of the B-17 crashes as human errors, they chose to think of them as design errors. This mindset, now referred to as the systems approach (Reason, 2000), assumes that errors are not the causes of the problem, but rather the consequences of vulnerabilities in the system. Therefore, the solution is not to find people who never make mistakes (good luck!); it is to set up systems that are more robust to the kinds of errors humans are most likely to make.

The systems approach has become widespread in many industries in addition to aviation. Software developers know that bugs are inevitable. Entire systems are set up in the medical field to catch when the wrong drug or the wrong amount of a drug is prescribed. These industries (Bates & Gawande, 2000; Kim et al., 2021), among others (e.g., accounting; Stefaniak & Robertson, 2010), therefore actively look for ways to avoid errors and have practices in place that are explicitly designed to catch mistakes. The systems approach assumes people will make mistakes, so it prioritizes creating systems to catch them.

In contrast, academic research appears to subscribe to the person approach. Stories about people finding errors in their own work typically include tremendous amounts of guilt and self-doubt: “I was still blind with worry for disappointing [my supervisor]” (Grave, 2021). They also include little discussion of the circumstances that may have led to the error. Indeed, best practices for avoiding errors in research are not a standard part of graduate training and discussion of errors is relatively rare in the discipline.

It may be tempting to think that cognitive scientists don’t talk much about ways to avoid errors because errors don’t often occur in our line of work. However, given data on self-reported frequency of errors (Kovacs et al., 2021) and analyses showing high levels of misreported statistics in the published literature (Nuijten et al., 2016), this seems unlikely. What seems more plausible is that researchers are just as human and just as error-prone as people working in aviation, medicine, and accounting. The difference is that when a cognitive scientist makes a mistake in their work, it doesn’t manifest as a 65,000-pound plane collapsing on the runway.

Figure 14.1. Jenkins, W. (1947). The Tactual Discrimination of Shapes for Coding Aircraft-Type Controls. In P. M. Fitts (Ed.), Psychological Research on Equipment Design.

Reviewing the literature on error prevention across disciplines led me to a striking realization: Industries in which errors are costly and dire conceive of mistakes very differently than academic researchers do. Those industries that have far more to lose when errors occur have devoted much more time and energy to thinking about the causes and cures for human error. So what can we learn from these fields? I advocate that we adopt a systems approach and recognize that errors “are often traceable to extrinsic factors that set the individual up to fail” (Van Cott, 2018, p. 61).

As one example, in many labs, it is common practice to have a single researcher be responsible for conducting the statistical analyses and entering numbers in the results section. When that researcher accidentally forgets to remove filler trials or reverse-code items, their honest mistake is allowed to persist into publication because there is no system in place to catch it. If we acknowledge that “humans, even diligent, meticulous and highly trained professionals, make mistakes” (Nath et al., 2006, p. 154), it seems obvious that a system that lacks any safeguards is indeed setting individuals up to fail.

To help address this issue, I’ve created Error Tight: Exercises for Lab Groups to Prevent Research Mistakes. The purpose of Error Tight is to provide hands-on exercises for lab groups to identify places in their research workflow where errors may occur and pinpoint ways to address them. The appropriate approach for a given lab will vary depending on the kind of research they do, their tools, the nature of the data they work with, and many other factors. As such, the project does not provide a set of one-size-fits-all guidelines, but rather is intended to be an exercise in self-reflection for researchers and to provide resources for solutions that are well-suited to them. It is unreasonable to expect perfection from anyone, but deliberately examining the nature of our systems may reveal situations in which the controls for the landing gear and wing flaps are too close together.

References

Bates, D. W., & Gawande, A. A. (2000). Error in medicine: what have we learned? Annals of Internal Medicine, 132(9), 763–767.

Chapanis, A. (1953). Psychology and the Instrument Panel. Scientific American, 188(4), 74–84.

Fitts, P. M., & Jones, R. E. (1947). Analysis of Factors Contributing to 460 “Pilot-Error” Experiences in Operating Aircraft Controls. Dayton, OH: Aero Medical Laboratory, Air Materiel Command, Wright-Patterson Air Force Base. https://apps.dtic.mil/sti/pdfs/ADA800143.pdf

Grave, J. (2021). Scientists should be open about their mistakes. Nature Human Behaviour, 5(12), 1593.

Kim, G., Humble, J., Debois, P., Willis, J., & Forsgren, N. (2021). The DevOps Handbook: How to Create World-Class Agility, Reliability, & Security in Technology Organizations (Second edition). IT Revolution Press.

Kovacs, M., Hoekstra, R., & Aczel, B. (2021). The Role of Human Fallibility in Psychological Research: A Survey of Mistakes in Data Management. Advances in Methods and Practices in Psychological Science, 4(4), 25152459211045930.

Nath, S. B., Marcus, S. C., & Druss, B. G. (2006). Retractions in the research literature: misconduct or mistakes? The Medical Journal of Australia, 185(3), 152–154.

Nuijten, M. B., Hartgerink, C. H. J., van Assen, M. A. L. M., Epskamp, S., & Wicherts, J. M. (2016). The prevalence of statistical reporting errors in psychology (1985–2013). Behavior Research Methods, 48(4), 1205–1226.

Reason, J. (2000). Human error: models and management. BMJ, 320(7237), 768–770.

Roscoe, S. N. (1997). The adolescence of engineering psychology. In S. M. Casey (Ed.), Volume 1, Human Factors History Monograph Series. Human Factors and Ergonomics Society.

Stefaniak, C., & Robertson, J. C. (2010). When auditors err: How mistake significance and superiors’ historical reactions influence auditors’ likelihood to admit a mistake. International Journal of Accounting, Auditing and Performance Evaluation, 14(1), 41–55.

Van Cott, H. (2018). Human Errors: Their Causes and Reduction. In M. S. Bogner (Ed.), Human Error in Medicine (pp. 53–65). CRC Press.