By Ava Ma De Sousa

This piece was originally posted on cogbites.

An ad by Mercedes-Benz illustrating the popular (debunked) theory that the right and left sides of the brain differ in function

Has anyone ever asked you if you are ‘right-brained’ or ‘left-brained’? Maybe you have even taken a Buzzfeed quiz to see which of these two sides ‘dominates’ your behavior. Though there is not much evidence to support this particular distinction (1), the question of modularity – whether there are specific regions in charge of specific functions – remains one of the biggest debates to date in cognitive science.

A specific region for recognizing faces?

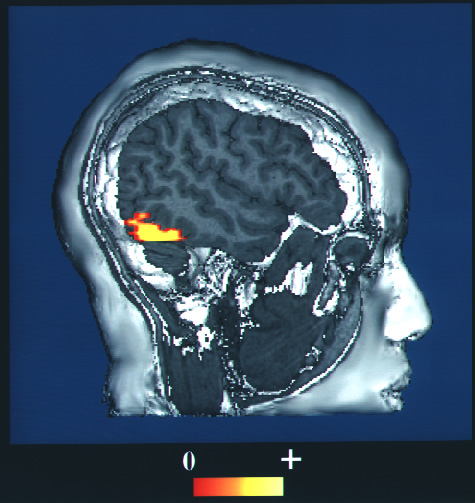

The Fusiform Face Area differs in activation when viewing images of faces versus other objects

Though some researchers firmly believe that the brain has specific modules – areas which always respond to a certain kind of input and never to other inputs (see, for example, cognitive scientist Jerry Fodor’s list of requirements) – others believe that all cognition is fully distributed throughout the brain, with no area responsible for a specific type of thought or behavior.

One region that has been the focus of much scientific argument is the ‘Fusiform Face Area’ (FFA), responsible for – you guessed it – facial perception.

Researchers discovered the brain region known as the FFA in the 1990s by putting a dozen people inside an MRI machine and scanning their brains while they looked at various images (2). The researchers discovered that one area, on the right side of the back of the head, was activated when viewing various images of faces (e.g., cartoons, faces turned to the side), but was most active when viewing a photo of a human face facing forward. When a participant was presented with photos of body parts or other objects, no activation occurred in this region.

The FFA is activated when we look at faces, but not when we look at anything else. This must mean we’ve found a face module, right?

Just because you can’t see it doesn’t mean it’s not there

Not so fast. One important criticism of the FFA comes from James Haxby (3). He argued that the results of Nancy Kanwisher and her colleagues, who first reported the FFA (2), did not prove that the region picks up exclusively on information about faces. Instead, the method they used may simply have lacked the resolution to pick up on information about other types of objects.

To investigate this further, Haxby and his colleagues devised a new approach to analyzing fMRI data. Instead of looking only at average activation levels in a particular brain region, they looked at finer-grained information: patterns of activity within the region. This approach is now referred to as Multivariate Pattern Analysis. Haxby’s team found that the FFA showed distinct patterns of activation for objects other than faces, although activation was higher for faces than for other objects. Thus, it seems that the purported face area does carry information about other objects!
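To make the difference between average activation and pattern information concrete, here is a minimal sketch in Python. It is a toy simulation, not Haxby's actual analysis or data: the category names, voxel count, noise level and function names are all assumptions made for illustration. Two made-up object categories are given the same average activation across voxels but different voxel-by-voxel patterns, and each pattern is measured twice with noise.

```python
import numpy as np


def simulate_halves(n_voxels=50, noise=0.5, seed=0):
    """Return two noisy measurements of two hypothetical category patterns."""
    rng = np.random.default_rng(seed)
    templates = {}
    for category in ("chairs", "shoes"):  # made-up categories
        pattern = rng.normal(size=n_voxels)
        # Mean-center so both categories share the same average activation.
        templates[category] = pattern - pattern.mean()
    halves = []
    for _ in range(2):  # think of these as two independent halves of the data
        halves.append({
            category: pattern + noise * rng.normal(size=n_voxels)
            for category, pattern in templates.items()
        })
    return halves


def mean_activation(half):
    """Univariate summary: a single average value per category."""
    return {category: float(pattern.mean()) for category, pattern in half.items()}


def classify_by_correlation(train, test):
    """Label each test pattern with the training category it correlates with most."""
    labels = {}
    for category, pattern in test.items():
        scores = {
            candidate: np.corrcoef(pattern, reference)[0, 1]
            for candidate, reference in train.items()
        }
        labels[category] = max(scores, key=scores.get)
    return labels


if __name__ == "__main__":
    first, second = simulate_halves()
    print("mean activation per category:", mean_activation(first))
    print("labels from pattern correlation:", classify_by_correlation(first, second))
```

In this toy setup the average activation barely differs between the two categories, yet correlating the patterns across the two measurements is enough to tell them apart. That is the intuition behind looking at patterns rather than averages.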

This finding still wasn’t the end of the modularity debate. Kanwisher argued that just because the information was there did not mean it was necessarily being used by the brain. The researchers tested this idea by directly stimulating the FFA. Check out the video here and see for yourself what happens!

As you can see in the video, when the participant looks at the researcher’s face (at minute 0:55 in the video), the participant’s perception changes completely. He claims the face morphs into a cartoon. However, when looking at other objects, like a box (at minute 0:10) or a ball (at minute 0:42), he claims that a face appears seemingly superimposed onto the object.

This video shows that with stimulation of the FFA, perception of faces is actually distorted, while perception of objects remains unchanged other than a flash of a face appearing on top of the object. So even if the FFA contains information about objects other than faces, this information does not seem to be used. The FFA therefore seems responsible solely for facial perception.

Does this mean the brain is modular?

Beyond a module for face perception, other brain regions also appear specialized for particular thoughts or behaviours. For instance, there seems to be a module for remembering places which produces hallucinations of places when stimulated (4) and another for perceiving human body parts (5).

In contrast, some brain regions that were thought to be exclusively involved in one process have turned out to be crucial for other processes as well. For instance, researchers Rebecca Saxe and Nancy Kanwisher found that one brain area, the temporo-parietal junction (TPJ), is activated exclusively in a particular social context: when people are thinking about other people’s beliefs (6).

However, the TPJ also plays a key role in attention (7). Thus, it seems unlikely that the TPJ only serves to represent the beliefs of others. Rather than being a module to represent something specific like belief, or faces, many accounts are shifting towards viewing these areas as conducting a key transformation of information, or computation, such as contextual updating (8).

Are modularity and distributed processing two sides of the same coin?

Brain areas may implement specific computations while still being embedded in larger networks responsible for higher level processing

Viewing brain regions as implementing a particular task or computation points to a new conception of what modules really are. Our intuition about what may count as ‘basic cognition’ might not correspond to ‘basic processing’ for a particular brain region.

For instance, when we judge the attractiveness of someone’s face, it feels automatic – perhaps ‘facial attractiveness’ could be its own module. Yet many studies have been done on the topic and no such module has been found. Rather, researchers in the field assume that this final judgment (of facial attractiveness) is built up from many different transformations of the initially presented stimulus.

There isn’t yet full consensus on which exact transformations are performed, but some researchers believe that the brain may compute the distances between different features (eyes, nose, lips), in this way checking facial proportions (9).
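As a rough illustration of what "computing the distances between features" could look like, here is a minimal Python sketch. It is not a model of what the brain does, nor the method of the cited study: the landmark coordinates are invented, and the choice to express every distance relative to the distance between the eyes is an assumption made so that the proportions do not depend on how large the face appears.

```python
from itertools import combinations
from math import dist

# Hypothetical 2D landmark positions in arbitrary units (not data from any study).
LANDMARKS = {
    "left_eye": (30.0, 60.0),
    "right_eye": (70.0, 60.0),
    "nose": (50.0, 40.0),
    "lips": (50.0, 20.0),
}


def pairwise_distances(points):
    """Euclidean distance between every pair of facial features."""
    return {
        (a, b): dist(points[a], points[b])
        for a, b in combinations(points, 2)
    }


def proportions(points, reference=("left_eye", "right_eye")):
    """Express each distance relative to a reference distance (assumed: eye to eye)."""
    distances = pairwise_distances(points)
    scale = distances[reference]
    return {pair: d / scale for pair, d in distances.items()}


if __name__ == "__main__":
    for pair, ratio in proportions(LANDMARKS).items():
        print(pair, round(ratio, 3))
```

Because the output is a set of ratios, the same face drawn at twice the size yields the same proportions, which is one reason a proportion-style computation is a plausible intermediate step between raw input and a final judgment.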

One or many brain areas may be responsible for this proportional calculation. Other regions (like the FFA) would likely also be needed to recognize the face initially, then integrate the calculation with memory. Thus, these different modules come together, each implementing some kind of transformation to get from input – such as colored blobs on a screen forming the image of a face – to output – such as a judgment of attractiveness, an emotion, a memory or behaviour. When seen in this way, distributed processing and modules seem more complementary than opposed.

In the end, while neuroscience has been able to provide some answers to this debate, how exactly input becomes output is still somewhat of a mystery and remains open to interpretation.

So, did you pick a side?

Images:

1. Flickr. Accessed 05.13.2020. https://www.flickr.com/photos/phploveme/6278507184/

2. Wikimedia Commons. Accessed 05.13.2020. https://commons.wikimedia.org/wiki/File:Fusiform_face_area_face_recognition.jpg

3. Pxfuel. Accessed 05.13.2020. https://www.pxfuel.com/en/free-photo-epiri

References:

1. Nielsen, J. A., Zielinski, B. A., Ferguson, M. A., Lainhart, J. E., & Anderson, J. S. (2013). An evaluation of the left-brain vs. right-brain hypothesis with resting state functional connectivity magnetic resonance imaging. PloS One, 8(8).

2. Kanwisher, N., McDermott, J., & Chun, M. M. (1997). The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience, 17(11), 4302-4311.

3. Haxby, J. V., Gobbini, M. I., Furey, M. L., Ishai, A., Schouten, J. L., & Pietrini, P. (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science, 293(5539), 2425-2430.

4. Mégevand, P., Groppe, D. M., Goldfinger, M. S., Hwang, S. T., Kingsley, P. B., Davidesco, I., & Mehta, A. D. (2014). Seeing scenes: Topographic visual hallucinations evoked by direct electrical stimulation of the parahippocampal place area. Journal of Neuroscience, 34(16), 5399-5405.

5. Downing, P. E., Jiang, Y., Shuman, M., & Kanwisher, N. (2001). A cortical area selective for visual processing of the human body. Science, 293(5539), 2470-2473.

6. Saxe, R., & Kanwisher, N. (2003). People thinking about thinking people: the role of the temporo-parietal junction in “theory of mind”. Neuroimage, 19(4), 1835-1842.

7. Shomstein, S. (2012). Cognitive functions of the posterior parietal cortex: Top-down and bottom-up attentional control. Frontiers in Integrative Neuroscience, 6, 38.

8. Geng, J. J., & Vossel, S. (2013). Re-evaluating the role of TPJ in attentional control: contextual updating? Neuroscience & Biobehavioral Reviews, 37(10), 2608-2620.

9. Shen, H., Chau, D. K., Su, J., Zeng, L. L., Jiang, W., He, J., … & Hu, D. (2016). Brain responses to facial attractiveness induced by facial proportions: evidence from an fMRI study. Scientific Reports, 6(1), 1-13.